Neural networks are a family of machine learning models loosely inspired by the structure and function of the human brain. They are composed of many interconnected processing units, called neurons, which work together to perform complex tasks such as image and speech recognition, natural language processing, and decision making.

Neural networks are often used in supervised learning, where the algorithm is trained on a labeled dataset and learns to make predictions or decisions based on the examples it is given. For example, a neural network might be trained on a dataset of images labeled with the objects they contain, and then be able to recognize those objects in new images.

In this blog post, we will explore the building blocks of neural networks, the different types of neural networks, and how they are trained. We will also discuss some of the applications and challenges of using neural networks. By the end of this post, you will have a better understanding of how neural networks work and how they can be used in machine learning.

Building Blocks of a Neural Network

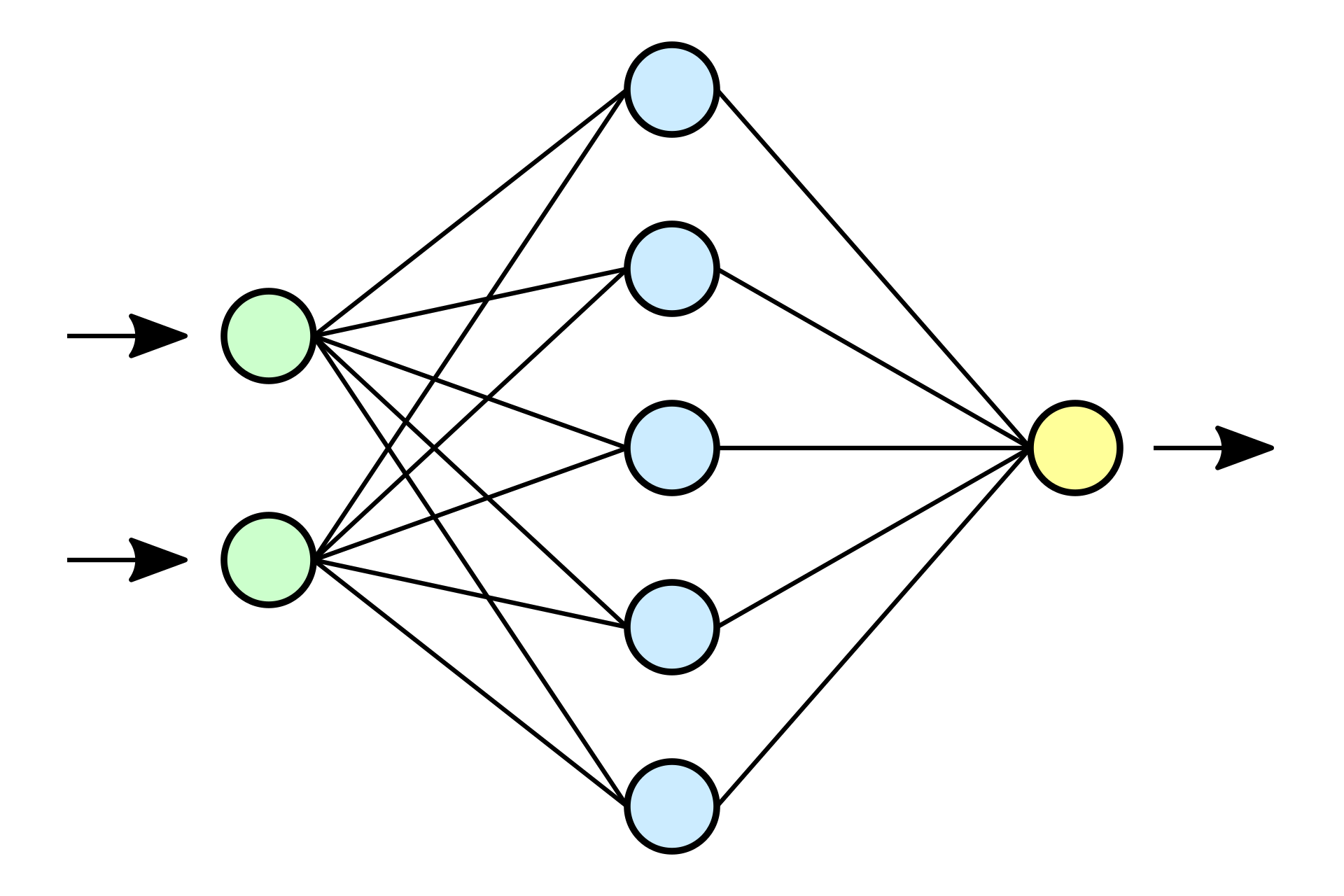

At the heart of a neural network is the neuron, a simple processing unit that receives input, performs calculations on that input, and produces an output. In a neural network, these neurons are organized into layers, with the input layer receiving the raw input data, one or more hidden layers performing intermediate calculations, and the output layer producing the final prediction or decision.

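In a fully connected (dense) layer, each neuron is connected to every neuron in the previous layer, and each connection carries a weight that determines how strongly that input influences the neuron. A neuron typically computes a weighted sum of its inputs, adds a bias term, and passes the result through a nonlinear activation function. During training, these weights and biases are adjusted to improve the performance of the neural network.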
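To make the weighted-sum idea concrete, here is a minimal pure-Python sketch of a single neuron and a fully connected layer. It assumes a bias term and a sigmoid activation, which are common choices but only two of several; the function names are illustrative and not taken from any library.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic activation: maps any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


def dense_layer(inputs: list[float], weight_rows: list[list[float]],
                biases: list[float]) -> list[float]:
    """A fully connected layer: neuron j uses weight_rows[j] and biases[j]."""
    return [neuron(inputs, row, b) for row, b in zip(weight_rows, biases)]


# The weighted inputs cancel out (1.0 * 0.5 + 2.0 * -0.25 = 0), so this is sigmoid(0)
print(neuron([1.0, 2.0], [0.5, -0.25], 0.0))  # prints 0.5
```

During training, the values in `weights` and `bias` are exactly what the optimizer adjusts; the inputs stay fixed.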

In a feedforward neural network, information flows in only one direction: from the input layer, through the hidden layers, to the output layer, with no loops. It is the most basic neural network architecture, and many networks used for tasks such as image and speech recognition, including the convolutional networks described next, are feedforward.
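A feedforward pass simply applies one layer after another, feeding each layer's outputs into the next. The sketch below assumes sigmoid activations and a hypothetical 2-3-1 network whose weights are hand-picked rather than trained, so its output is meaningless beyond showing the data flow.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases):
    """Fully connected layer; weights[j][i] connects input i to neuron j."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def feedforward(x, layers):
    """Pass x through each (weights, biases) layer in order, with no cycles."""
    activation = x
    for weights, biases in layers:
        activation = dense(activation, weights, biases)
    return activation


# Hypothetical 2-3-1 network with hand-picked (untrained) weights
layers = [
    # hidden layer: 3 neurons, each with 2 inputs
    ([[0.2, -0.4], [0.7, 0.1], [-0.5, 0.3]], [0.0, 0.1, -0.1]),
    # output layer: 1 neuron with 3 inputs
    ([[0.6, -0.2, 0.9]], [0.05]),
]
output = feedforward([1.0, 0.5], layers)
print(output)  # a single value between 0 and 1
```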

Convolutional neural networks (CNNs) are a specialized type of neural network designed for grid-structured input data, most notably two-dimensional images. In a CNN, the neurons in the convolutional layers are arranged in two-dimensional grids (feature maps), and each neuron receives input only from a small local region of the previous layer. The same set of weights (a filter, or kernel) is shared across all positions, which lets the network learn spatial hierarchies of features, from simple edges in early layers to more complex shapes in deeper ones.
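The core CNN operation can be sketched in a few lines of pure Python. This minimal version assumes a single channel, a single filter, stride 1 and no padding ("valid" mode), and it uses a hand-written vertical-edge kernel for illustration; a real CNN learns its kernels during training and relies on an optimized library implementation.

```python
def conv2d(image, kernel):
    """Valid 2D cross-correlation, which deep learning libraries call convolution.

    Each output value depends only on a local kh x kw patch of the input,
    and the same kernel weights are reused at every position.
    """
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        out.append(row)
    return out


# A 4x4 image with a vertical edge between columns 1 and 2
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-written vertical-edge detector (illustrative; not a learned kernel)
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, kernel)
print(feature_map)  # strongest response in the middle column, where the edge is
```

The 3x3 feature map responds only where the edge is, and the same four weights were used at all nine positions; that weight sharing is what keeps CNNs far smaller than fully connected networks on images.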

Recurrent neural networks (RNNs) are a type of neural network that can process sequential input data, such as text or time series. In an RNN, the output at each time step depends not only on the current input but also on a hidden state carried over from previous steps. This allows the network to capture temporal relationships in the data and make predictions based on earlier inputs.
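A minimal sketch of a simple (Elman-style) recurrent layer shows how the hidden state carries information forward. It assumes a tanh activation, one-dimensional inputs, two hidden units and hypothetical hand-picked weights. The second and third inputs are zero, yet the hidden state stays nonzero because it still reflects the first input.

```python
import math


def rnn_step(x, h_prev, w_xh, w_hh, b):
    """One step of a simple RNN: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b)."""
    return [
        math.tanh(
            sum(w * xi for w, xi in zip(w_xh[j], x))
            + sum(w * hi for w, hi in zip(w_hh[j], h_prev))
            + b[j]
        )
        for j in range(len(b))
    ]


def run_rnn(sequence, hidden_size, w_xh, w_hh, b):
    """Feed a sequence one element at a time, carrying the hidden state forward."""
    h = [0.0] * hidden_size  # initial hidden state
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b)
        states.append(h)
    return states


# Hypothetical weights: 1-dimensional inputs, 2 hidden units
w_xh = [[0.5], [-0.3]]
w_hh = [[0.1, 0.4], [0.2, -0.6]]
b = [0.0, 0.0]
states = run_rnn([[1.0], [0.0], [0.0]], 2, w_xh, w_hh, b)
for t, h in enumerate(states):
    print(t, h)
```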

In the next section, we will discuss how neural networks are trained to make predictions or decisions based on the input data.

Training Neural Networks

Training a neural network involves adjusting the weights of the connections between the neurons to minimize the error between the predicted output and the true output. This is typically done with an optimization algorithm such as stochastic gradient descent (SGD), which adjusts the weights in small increments in the direction that reduces the error. The gradients that SGD needs are computed by backpropagation, which applies the chain rule to propagate the error backward from the output layer through the network.
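The division of labor between backpropagation (computing gradients) and gradient descent (using them) can be seen in the smallest possible case. This sketch assumes a single sigmoid neuron with one weight, one bias and a squared-error loss, all chosen for brevity; the learning rate of 0.5 is an arbitrary illustrative value.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss(w, b, x, y):
    """Squared error of a single sigmoid neuron on one example."""
    return 0.5 * (sigmoid(w * x + b) - y) ** 2


def backprop(w, b, x, y):
    """Gradient of the loss w.r.t. w and b, computed with the chain rule."""
    y_hat = sigmoid(w * x + b)
    dloss_dyhat = y_hat - y
    dyhat_dz = y_hat * (1.0 - y_hat)  # derivative of the sigmoid
    delta = dloss_dyhat * dyhat_dz
    return delta * x, delta           # dz/dw = x, dz/db = 1


def sgd_step(w, b, x, y, lr=0.5):
    """Gradient descent: move each parameter a small step against its gradient."""
    grad_w, grad_b = backprop(w, b, x, y)
    return w - lr * grad_w, b - lr * grad_b


w, b = 0.3, -0.1
x, y = 1.5, 1.0

grad_w, grad_b = backprop(w, b, x, y)
# Numerical check: a central difference should closely match the chain-rule result
eps = 1e-6
numeric_w = (loss(w + eps, b, x, y) - loss(w - eps, b, x, y)) / (2 * eps)
print(grad_w, numeric_w)

w_new, b_new = sgd_step(w, b, x, y)
print(loss(w, b, x, y), loss(w_new, b_new, x, y))  # the loss drops after one step
```

Comparing a hand-derived gradient against a finite-difference estimate, as above, is a cheap way to catch mistakes in backpropagation code.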

Training starts from a dataset of inputs paired with their true output labels, and the weights are initialized to small random values. Each input is passed forward through the network, and the network's output is compared with the true label to compute the error (the loss). The weights are then adjusted to reduce this error, and the process is repeated over many epochs (an epoch is one full pass through the training data) until the error stops decreasing meaningfully.
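The training procedure can be sketched as a loop over epochs. This minimal example trains a single sigmoid neuron to reproduce the logical AND function; the dataset, learning rate, number of epochs and per-example (stochastic) updates are illustrative assumptions, and a real network would have hidden layers and would normally be trained with a framework.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train(data, epochs=5000, lr=1.0, seed=0):
    """Train one sigmoid neuron with stochastic gradient descent.

    One epoch is a full pass over the training data; each example
    triggers one weight update.
    """
    rng = random.Random(seed)
    examples = list(data)  # copy so shuffling does not reorder the caller's list
    weights = [rng.uniform(-1.0, 1.0) for _ in range(len(examples[0][0]))]  # random init
    bias = 0.0
    history = []  # average loss per epoch
    for _ in range(epochs):
        rng.shuffle(examples)
        total = 0.0
        for x, y in examples:
            y_hat = predict(weights, bias, x)           # forward pass
            total += 0.5 * (y_hat - y) ** 2              # compare with the true label
            delta = (y_hat - y) * y_hat * (1.0 - y_hat)  # error signal via the chain rule
            weights = [w - lr * delta * xi for w, xi in zip(weights, x)]
            bias -= lr * delta
        history.append(total / len(examples))
    return weights, bias, history


# Truth table for logical AND: ((input1, input2), label)
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias, history = train(AND_DATA)
print([round(predict(weights, bias, x)) for x, _ in AND_DATA])
print(history[0], history[-1])
```

Shuffling the examples each epoch is a common SGD practice that keeps the updates from following the same order every time.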

During training, it is important to split the dataset into a training set and a validation set. The training set is used to adjust the weights, while the validation set, which the model never trains on, is used to evaluate how well the model generalizes and to detect overfitting. Overfitting occurs when the model performs well on the training set but poorly on new, unseen data. It can be mitigated with regularization techniques, such as weight decay or dropout, or with early stopping, which halts training once performance on the validation set stops improving.
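Below is a minimal sketch of a train/validation split together with early stopping. The `EarlyStopping` class, its `patience` and `min_delta` parameters, and the validation-loss values in the usage lines are all hypothetical and made up for illustration; the curve simply mimics the typical pattern where validation loss falls and then rises once the model starts to overfit.

```python
import random


def train_val_split(examples, val_fraction=0.2, seed=0):
    """Shuffle a copy of the data and hold out a fraction for validation."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]  # (training set, validation set)


class EarlyStopping:
    """Hypothetical helper: stop when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.epochs_without_improvement = 0

    def step(self, epoch, val_loss):
        """Record this epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience


stopper = EarlyStopping(patience=3)
val_losses = [0.9, 0.7, 0.6, 0.55, 0.56, 0.58, 0.61, 0.65]  # made-up curve
for epoch, val_loss in enumerate(val_losses):
    if stopper.step(epoch, val_loss):
        break
print(epoch, stopper.best_epoch)  # prints 6 3
```

In a real training loop you would also save a copy of the weights whenever `best_epoch` changes and restore that copy after stopping, so the final model is the one that did best on the validation set.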

Applications of Neural Networks

Neural networks have a wide range of applications in various fields, including computer vision, natural language processing, and robotics. Some examples of these applications are:

- Image and speech recognition: Neural networks can be trained to recognize objects, scenes, and sounds in images and audio recordings. This allows them to perform tasks such as identifying faces in photographs or transcribing speech to text.

- Natural language processing: Neural networks can be used to process and understand human language, allowing them to perform tasks such as machine translation, sentiment analysis, and question answering.

- Self-driving cars: Neural networks can help a self-driving car perceive its surroundings and decide how to act. They can be trained to recognize objects and obstacles in the environment and to support navigating the vehicle to a desired destination.

Challenges and Limitations of Neural Networks

Although neural networks have achieved impressive results in many applications, there are still challenges and limitations to their use. Some of these challenges and limitations include:

- The need for large amounts of training data: Neural networks typically require a large amount of labeled data in order to learn effectively. This can be difficult and time-consuming to obtain, especially for tasks involving rare or complex data.

- The potential for overfitting: As mentioned earlier, overfitting can occur when a neural network performs well on the training set but poorly on new, unseen data. This can be addressed by using regularization techniques or early stopping, but it can still be a challenge.

- Limited interpretability: Unlike some other machine learning algorithms, neural networks are often considered "black box" models, meaning it is difficult to understand how they arrive at their decisions or predictions. This can make it challenging to trust the results of a neural network or to diagnose and correct problems with the model.

Introduction to Neural Networks - 22 December 2022